Best security practices for developer productivity

Organizations and enterprises must ensure robust security for their endpoints without causing any interruptions to their developers' experience and productivity.

This guide describes the best practices and features organizations can apply to consistently secure developers using ngrok.

The "Front Door" Method

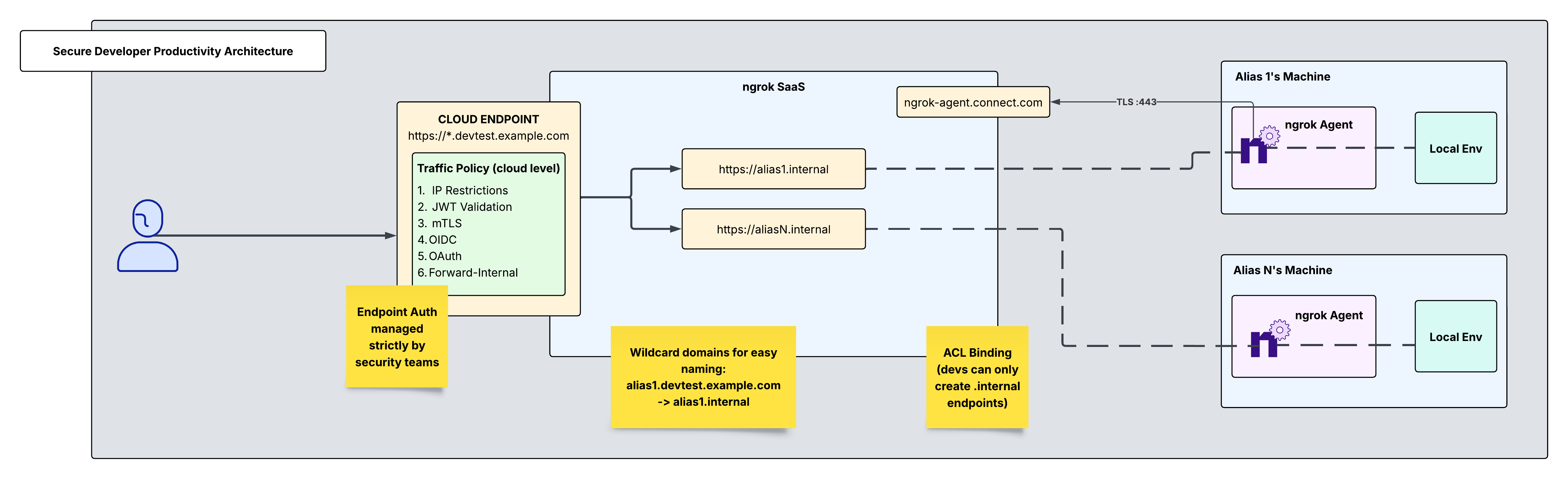

Instead of exposing individual developer endpoints publicly, you can take a more secure approach: create a single, centralized cloud endpoint managed by the security team. This endpoint can be customized with traffic policies while developer endpoints stay private and protected.

Architectural Reference

Create one ngrok account, managed by security or DevOps. The following can be managed centrally from this account's dashboard:

- Cloud endpoint traffic policies

- Authtokens and ACL restrictions

- Users and access control

Add developers as team members to your ngrok account

Invite each developer who uses ngrok to your central ngrok account as a team member. With RBAC (available on ngrok's pay-as-you-go plan), you can also restrict their access to read-only.

Once the invite is sent, have the developer open the invitation email and sign up. Their account will be registered under the central account.

Create an authtoken for each developer

Create a tunnel authtoken for each developer. This authtoken is specific to that user and is their key to setting up internal endpoints. Assigning it an ACL rule ensures that the user holding this authtoken can only create one internal endpoint, bound to their alias. Navigate to the authtokens section of your dashboard and click "new authtoken". Set the description, set the owner to the developer's email, and assign an ACL rule that limits the token to that developer's internal endpoint.

Developer installs ngrok agent and defines an internal endpoint in ngrok.yml

Have the developer install the ngrok agent, then have them run `ngrok config add-authtoken` with the authtoken you provisioned for them in the previous step so that their agent uses it.

Internal Endpoints are private endpoints that only receive traffic when forwarded through the forward-internal traffic policy action. This allows you to route traffic to an application through ngrok without making it publicly addressable.

Internal endpoint URLs must:

- End with the .internal domain extension

- Use the internal binding

Each developer has their own configuration file for the agent installed on their machine, and each file contains an internal endpoint unique to that developer's alias. The configuration file can be found at /path/to/ngrok/ngrok.yml. Have the developer add their internal endpoint to this file.
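As a rough sketch of what such a file might contain, assuming the agent's version 3 configuration format. The alias `alias1`, the endpoint name, the local port 8080, and the `<AUTHTOKEN>` placeholder are illustrative values, not taken from this guide:

```yaml
# ngrok.yml for the developer with alias "alias1" (hypothetical values throughout)
version: 3
agent:
  # The ACL-restricted authtoken provisioned for this developer
  authtoken: <AUTHTOKEN>
endpoints:
  - name: alias1-internal
    # Internal endpoint URLs must end with the .internal domain extension
    url: https://alias1.internal
    upstream:
      # Assumed local port of the developer's application
      url: 8080
```

Because the authtoken's ACL rule only permits this one internal endpoint, a different URL in this file should be rejected when the agent starts.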

Within this configuration file, developers have the ability to add any further traffic policy actions that may aid them in their testing.

In this way, developers only handle private endpoints and don't have permissions to alter any configurations on public endpoints.

Reserve a custom wildcard domain for your public cloud endpoint

Reserving a custom wildcard domain is the first step toward a public cloud endpoint that receives traffic on any subdomain of your domain. Navigate to the domains section of your dashboard and click "new domain". Whatever naming convention you use for this domain, it requires the proper DNS CNAME records. The CNAME targets are returned after you create the domain, and it can take 5-10 minutes for SSL to be established.

Create a public cloud endpoint and traffic policy

Cloud Endpoints are persistent, always-on endpoints whose creation, deletion, and configuration are managed centrally via the Dashboard or API. They exist until they are explicitly deleted. Cloud Endpoints do not forward their traffic to an agent by default; instead, they use only their attached Traffic Policy to handle connections.

This cloud endpoint will multiplex across several internal endpoints which point to their corresponding local development environments.

You can use traffic policy to forward traffic from your cloud endpoint to the correct internal endpoint based on the hostname that developers make requests to. Since the cloud endpoint is set up as a wildcard URL, whatever subdomain appears in place of the wildcard is forwarded to its corresponding internal endpoint.

alias1.devtest.example.com → alias1.internal → alias1's local env

So, all alias1 has to do is go to that cloud endpoint URL in their browser and they will be able to access their local environment. Same goes for alias2, alias3, and aliasN.

To achieve this dynamic routing, replace the default traffic policy on the cloud endpoint in the dashboard with one that uses the forward-internal action to derive the internal endpoint URL from the request hostname.
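A minimal sketch of such a policy, assuming the wildcard domain is `*.devtest.example.com` (as in the example mapping above) and that the CEL `split` function is available on `req.host` in your traffic policy. Treat the exact expression as an assumption to verify against the traffic policy reference:

```yaml
on_http_request:
  - actions:
      - type: forward-internal
        config:
          # alias1.devtest.example.com -> https://alias1.internal
          url: https://${req.host.split(".devtest.example.com")[0]}.internal
```

The expression strips the shared domain suffix from the hostname, so the remaining subdomain (the developer's alias) selects the internal endpoint.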

Secure your cloud endpoint

Now that traffic is forwarded correctly from the cloud endpoint to its corresponding internal endpoints, you can layer security onto the public cloud endpoint. There are a variety of traffic policy actions to choose from. The sections below cover IP Restrictions, JWT Validation, and mTLS; many other actions are listed in Traffic Policy Actions. Add these actions to your existing traffic policy config so that they precede the forward-internal action.

IP Restrictions

For developers with a designated source IP, you can use the restrict-ips action to allowlist trusted source IPs so that only those IPs can access the cloud endpoint.
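A sketch of a restrict-ips rule, assuming a single trusted range. The CIDR below is a reserved documentation range used as a placeholder, not a real developer network:

```yaml
on_http_request:
  - actions:
      - type: restrict-ips
        config:
          # Reject requests that do not match the allow list
          enforce: true
          allow:
            # Hypothetical trusted developer source range
            - 203.0.113.0/24
```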

JWT Validation

You can also require clients to send a JWT with each request. Specify an allowlist of issuers and JWKS URLs, and ngrok validates the JWT for you.
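One possible shape for a jwt-validation rule is sketched below. The issuer, audience, and JWKS URL are hypothetical, and the field layout reflects an assumed reading of the action's configuration schema, so check it against the jwt-validation action reference before use:

```yaml
on_http_request:
  - actions:
      - type: jwt-validation
        config:
          issuer:
            allow_list:
              # Hypothetical identity provider
              - value: https://idp.example.com/
          audience:
            allow_list:
              # Hypothetical audience claim
              - value: https://devtest.example.com
          http:
            tokens:
              # Read the token from "Authorization: Bearer <jwt>"
              - type: jwt
                method: header
                name: Authorization
                prefix: "Bearer "
          jws:
            allowed_algorithms:
              - RS256
            keys:
              sources:
                additional_jkus:
                  # Hypothetical JWKS URL used to fetch signing keys
                  - https://idp.example.com/.well-known/jwks.json
```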

mTLS

If you would rather authenticate clients with certificates issued by your own CA (supplied in PEM format), you can also enable mTLS, in which case TLS termination happens at the ngrok cloud edge.
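A sketch of what an mTLS rule might look like, assuming the terminate-tls action runs in the `on_tcp_connect` phase and accepts CA certificates through a `mutual_tls_certificate_authorities` field. Both are assumptions to confirm in the action reference, and the certificate body is a placeholder:

```yaml
on_tcp_connect:
  - actions:
      - type: terminate-tls
        config:
          mutual_tls_certificate_authorities:
            # Replace the placeholder with your CA certificate in PEM format
            - |-
              -----BEGIN CERTIFICATE-----
              <YOUR_CA_CERTIFICATE_BODY>
              -----END CERTIFICATE-----
```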

For all of these options, you can either use one or layer multiple actions onto the cloud endpoint.
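To illustrate the ordering, here is a sketch of a combined policy in which an IP restriction runs before the routing step. The CIDR and the `devtest.example.com` suffix are placeholder values, and the routing expression assumes CEL `split` is available on `req.host`:

```yaml
on_http_request:
  - actions:
      # Security action first: only allowlisted IPs get any further
      - type: restrict-ips
        config:
          enforce: true
          allow:
            - 203.0.113.0/24
      # Routing last: forward to the developer's internal endpoint
      - type: forward-internal
        config:
          url: https://${req.host.split(".devtest.example.com")[0]}.internal
```

Actions in a rule run in order, so a request rejected by the first action never reaches forward-internal.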

Create a custom connect URL

Custom connect URLs are available with ngrok's pay-as-you-go plan. They provide a white-labeling capability: your ngrok agents connect to connect.example.com instead of the default connection hostname (connect.ngrok-agent.com). Dedicated IPs, unique to your account, are also available for your agents to connect to. This removes the danger of rogue agents in your network trying to call home and adds a layer of security by making your ngrok connectivity specific to your organization.

Once you have created the custom connect URL, specify it in each agent's configuration file.
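A sketch of the relevant section, assuming the version 3 configuration format, a `connect_url` field under `agent`, and port 443. The field name and port are assumptions to verify against the agent configuration reference:

```yaml
version: 3
agent:
  authtoken: <AUTHTOKEN>
  # Custom connect URL from the previous step (port 443 assumed)
  connect_url: connect.example.com:443
```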

Recap

You have now built a system that allows your security team to centrally manage and secure a cloud endpoint, which developers can seamlessly use to access applications running in their local environments. Let's recap what you've built:

- One ngrok agent per developer machine, each using an ACL-restricted authtoken provisioned by security specialists.

- Local, uninterrupted development and testing for engineers, made securely available via cloud and internal endpoints.

- Granular access with a composable traffic policy offering refined and robust security measures for a single public cloud endpoint.